Chapterising audiobooks

13 Apr 2020

So today is Easter Sunday and we are still on lockdown.

I could have spent the weekend building an API client, polishing my CV or making progress on the 30-day LeetCode challenge.

Instead I decided to go for my government-sanctioned walk.

Going for these long walks by myself can get a bit monotonous, so I wanted to listen to an audiobook. However, the issue was that I had an mp3 file that was 12 hours of continuous audio. I had never bothered to understand why my iOS Music app or iTunes couldn’t remember the current position once I paused or stopped it, so I decided to apply some “engineering thinking” to this problem. I came up with two initial approaches, which I cross-validated with Reddit.

Estimation approach

The first one involved finding the table of contents for the book and getting a sense of how many pages each chapter has. Then, assuming an average narration speed of about 2 minutes per page, I could get the timings for each chapter. I could then skip to each part and use Audacity, QuickTime or iTunes to split the file into parts.
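As a rough sketch of that arithmetic: assuming roughly 2 minutes of narration per page, the estimated start of each chapter is just the running total of the pages before it. The chapter titles and page counts below are made up for illustration, and `estimate_chapter_starts` is a hypothetical helper name.

```python
from datetime import timedelta

MINUTES_PER_PAGE = 2  # assumed average narration speed


def estimate_chapter_starts(page_counts, minutes_per_page=MINUTES_PER_PAGE):
    """Return (title, start offset) pairs for (title, pages) pairs in reading order."""
    starts = []
    elapsed = timedelta(0)
    for title, pages in page_counts:
        starts.append((title, elapsed))
        # Each chapter pushes the next one back by its estimated narration time.
        elapsed += timedelta(minutes=pages * minutes_per_page)
    return starts


if __name__ == "__main__":
    # Hypothetical table of contents: (chapter title, number of pages)
    toc = [("Chapter 1", 18), ("Chapter 2", 25), ("Chapter 3", 12)]
    for title, start in estimate_chapter_starts(toc):
        print(f"{title}: starts at about {start}")
```

The estimates will drift over a 12-hour file, so they are only good enough to get close to a chapter break before fine-tuning by ear.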

After spending 5 to 10 minutes looking for the table of contents and failing, I decided to go with my second approach.

AI approach

This second approach involved finding a library on GitHub which would help me analyse audio files for occurrences of specific words. After a few minutes of searching, I discovered SpeechRecognition. It is essentially an interface to many different speech recognition APIs. The only offline API was provided by a library developed at Carnegie Mellon University called CMUSphinx. According to their blog it has been superseded by BERT, WaveNet and others. I chose to use it as it was way simpler at first glance.

After some further Googling and going on StackOverflow I had my process down:

- I would convert the mp3 into a 16 kHz mono WAV file to enable processing:

    ffmpeg -i file.mp3 -ar 16000 -ac 1 file.wav

- I would build and install the latest version of pocketsphinx, a lightweight recognizer library written in C:

    brew tap watsonbox/cmu-sphinx
    brew install --HEAD watsonbox/cmu-sphinx/cmu-pocketsphinx

  If you’re on a Mac, using Homebrew should work without any hiccups. The alternative is to try to compile the library from source, which can be a bit more painful as there are all of these transitive dependencies and you have to make sure the directory where you cloned the library is called pocketsphinx.

- Download the most recently modified language model, in my case for US English.

- Then run the detection:

    pocketsphinx_continuous -infile file.wav -hmm ~/Downloads/en-us -kws_threshold 1e-10 -keyphrase "chapter" -time yes

  Here is an overview of the flags:

    -infile         Audio file to transcribe.
    -hmm            Directory containing acoustic model files.
    -kws_threshold  Threshold for p(hyp)/p(alternatives) ratio.
    -keyphrase      Keyphrase to spot.
    -time           Print word times in file transcription.
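To turn the detection output into something usable, the reported word times still need to be pulled out. The sketch below assumes that, with `-time yes`, each hit is printed as a line of the form `word start end confidence` with times in seconds. I have not verified that shape against every pocketsphinx build, so treat the pattern as an assumption; `keyword_start_times` and `as_timestamp` are hypothetical helper names.

```python
import re

# Assumed line shape with `-time yes`: "<word> <start_sec> <end_sec> <confidence>"
HIT_PATTERN = re.compile(
    r"^(?P<word>\S+)\s+(?P<start>\d+\.\d+)\s+(?P<end>\d+\.\d+)\s+(?P<conf>\S+)$"
)


def keyword_start_times(output, keyword="chapter"):
    """Return the start time (seconds) of every line that reports the keyword."""
    times = []
    for line in output.splitlines():
        match = HIT_PATTERN.match(line.strip())
        if match and match.group("word").lower() == keyword:
            times.append(float(match.group("start")))
    return times


def as_timestamp(seconds):
    """Format seconds as H:MM:SS, handy for skipping around in a player."""
    whole = int(seconds)
    return f"{whole // 3600}:{whole % 3600 // 60:02d}:{whole % 60:02d}"


if __name__ == "__main__":
    # Made-up sample output, not a real run
    sample = "chapter 12.340 12.870 0.981\nchapter 1834.500 1835.020 0.912\n"
    for start in keyword_start_times(sample):
        print(as_timestamp(start))
```

Log lines and anything else that does not match the assumed shape are simply skipped, so the recognizer's output can be piped in unfiltered.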

After finally configuring everything, I ran the model, but it wasn’t as accurate as I had hoped. Once I realised it would take at least 40 minutes to complete, it was time to abandon this approach. It was a good learning experience!

Okay, so I still hadn’t partitioned this large mp3 into smaller, more manageable pieces. It was time to use QuickTime and simply trim the audio to my liking!

QuickTrim approach

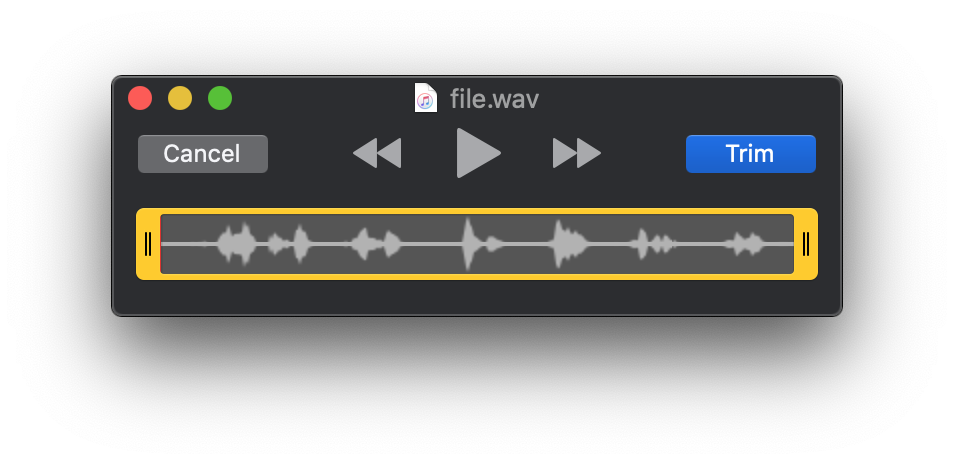

Simply open the file in QuickTime Player or any audio editor.

In QuickTime, Edit > Trim opens the trim editor shown in the screenshot.

All you need to do is spot the pauses and trim up to that point. It’s not perfect but you would end up with smaller pieces of content that you can consume in one session.
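If you would rather script the cutting once you have noted where the pauses are, ffmpeg (already used above for the WAV conversion) can copy out each segment. This is only a sketch: the cut points, the `part_NN.mp3` output names and the helper names are hypothetical, and `-c copy` cuts without re-encoding, so the boundaries may land slightly off the exact second.

```python
import subprocess


def build_split_commands(source, cut_points, total_seconds):
    """Build one ffmpeg command per segment between consecutive cut points (seconds)."""
    boundaries = [0.0] + sorted(cut_points) + [total_seconds]
    commands = []
    for index, (start, end) in enumerate(zip(boundaries, boundaries[1:]), start=1):
        commands.append([
            "ffmpeg", "-i", source,
            "-ss", f"{start:.3f}",    # segment start
            "-to", f"{end:.3f}",      # segment end
            "-c", "copy",             # copy the audio stream without re-encoding
            f"part_{index:02d}.mp3",  # hypothetical output name
        ])
    return commands


def split(source, cut_points, total_seconds):
    """Run the commands; requires ffmpeg on the PATH."""
    for command in build_split_commands(source, cut_points, total_seconds):
        subprocess.run(command, check=True)


if __name__ == "__main__":
    # Hypothetical cut points for a 12-hour file; this only prints the commands
    for command in build_split_commands("file.mp3", [1800, 3900], 12 * 3600):
        print(" ".join(command))
```

Printing the commands first makes it easy to check the boundaries before calling `split` and writing any files.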

Obvious approach

What I could have done from the start is simply Google for my issue.

I would have quickly discovered that there is an option to persist the last playback position.

While playing the audiobook, simply select Edit > Song Info > Options > Remember Playback Position (and tick the box).